Friday, 27 October 2017

Gender stereotyping used in the media for the recruitment of women to the British Army

Friday, 20 October 2017

Westminster Student Blog Series

Public Sphere in the case of the Women's March on London

Author: Tian

A student of the Frankfurt School of Social Research, Jürgen Habermas wrote The Structural Transformation of the Public Sphere (1962) to explore the status of public opinion in the practice of representative government in Western Europe.

The feminist critique points out that the ‘public sphere’ proposed a sphere of educated, rich men, juxtaposed with the private sphere that has been seen as the domain of women, and that it also excluded gays, lesbians, and ethnic minorities (Fraser, 1990). The criticism has also suggested that a democratic and multicultural society should be based on plural public arenas (Fuchs, 2014). Habermas agrees that his early account in The Structural Transformation of the Public Sphere, published in 1962, neglected proletarian, feminist and other public spheres (Fuchs, 2014).

A recent example is the success of the Women’s March. The US election proved a catalyst for a grassroots movement of women to assert the positive values that the politics of fear denies. The Women’s March was held to stand up for the rights of women and to show solidarity with participants from threatened minorities such as Muslim and Mexican citizens and the LGBTQ community. Hundreds of marches took place in major cities around the world.

The video criticises the concept of the ‘public sphere’ from both a feminist perspective and the perspective of emerging social media. It discusses how the rise of social networking sites has resulted in public discussions on digital platforms.

Reference:

Fraser, N. (1990). Rethinking the Public Sphere: A Contribution to the Critique of Actually Existing Democracy. Social Text, (25/26), p.56.

Fuchs, C. (2014). Social Media: A Critical Introduction. London: Sage.

Monday, 16 October 2017

Social media research, ‘personal ethics’, and the Ethics Ecosystem

Friday, 29 September 2017

Westminster Student Blog Series

The Internet as Playground and Factory

Author: Remigijus Marciulis

In recent years, the labour theory of value has been a field of intense interest and debate, particularly the use of Marxist concepts in the digital context. There are clear facts showing that giant online companies like Facebook and Google have accumulated enormous amounts of capital by selling their users’ data to advertisers. The phenomenon of the ‘Social Factory’ is discussed by different scholars. The value we create goes beyond actual factory walls and extends into the online sphere. “The sociality is industrialised and industry is socialised” (Jarrett, 2016: 28). Trebor Scholz refers to the Internet as a ‘playground’ and ‘factory’. His argument is based on the fact that being online is part of having fun. Does uploading a video to YouTube really count as digital exploitation? On the other hand, Christian Fuchs says that there is a direct connection between time spent on the Internet and capitalist exploitation, free labour and surplus value. Kylie Jarrett tackles the subject through the metaphor of the Digital Housewife. She applies Marxist concepts and feminist approaches to investigate the digital world. According to her, digital or immaterial labour is profoundly exploited by capitalism.

The interview with Dr Alessandro Gandini explores the subjects of digital labour and ‘playbour’, and the use and appropriateness of Marxist concepts. To sum up, the subject of digital labour and exploitation is complex and diverse. It requires a more profound study that distinguishes “real” digital work from time spent online for leisure. Scholars agree that communicative action and activism are the key instruments for fighting digital capitalist inequalities.

Friday, 22 September 2017

Call for speakers: Answering social science questions with social media data

- How has it impacted policy, best practice, or understanding?

- How has it answered a question that would have been unfeasible using conventional research methods alone?

The #NSMNSS & SRA teams

Friday, 15 September 2017

Westminster Student Blog Series

Digital Review: Public Sphere and the Exclusion of Women

Author: Karolina Kramplova

The creation of new forms of digital social media during the first decade of the 21st century has completely changed the way in which many people communicate and share information. When we think about social media as a space where the public can discuss current affairs and politics, it is interesting to consider it alongside the theory of the public sphere. Ever since Habermas established this concept, it has been criticised by scholars like Nancy Fraser. She argues that the theory was built on a number of exclusions and discriminations. I focused on the exclusion of women from political life. Andy Ruddock, author of the book Youth and Media, also talks about the lack of representation of women in subculture studies and how social media is not about democratisation and public debate but rather about people picking what they like. An activist, Hannah Knight, acknowledges the discrimination women face to this day. However, when it comes to the public sphere and social media, even though Knight argues there is a space for public debate, she says people are not listening to everyone. Social media empowers movements such as the Women’s March, but does it contribute towards democratisation, or do we just want to believe it does? Both the scholar, Ruddock, and the activist, Knight, have persuaded me that the concept of the public sphere is no longer relevant when it comes to social media.

Friday, 1 September 2017

Westminster Student Blog Series

Journalism, the Filter Bubble and the Public Sphere

Author: Mick Kelly

“The influence of social media platforms and technology companies is having a greater effect on American journalism than even the shift from print to digital.”

(Bell and Owen, 2017)

This is the conclusion of a study released in March 2017 by researchers from Columbia University’s Graduate School of Journalism who investigated a journalism industry reacting to controversies about fake news and algorithmic filter bubbles that occurred at the time of the US presidential election. The report noted the following key points:

• Technology companies have become media publishers

• Low-quality content that is sharable and of scale is viewed as more valuable by social media platforms than high-quality, time-intensive journalism

• Platforms choose algorithms over human editors to filter content, but the ‘nuances of journalism require editorial judgment, so platforms will need to reconsider their approach’.

The report states that news might currently reach a bigger audience than ever before via social media platforms such as Facebook, but readers have no way of knowing how data influences the stories they read or how ‘their online behaviour is being manipulated’ (Bell and Owen, 2017).

This video assignment reveals that the debate has existed since 2011 when Eli Pariser wrote The Filter Bubble, which explained how data profiling led to personalisation and the algorithmic filtering of news stories. The theme of this video is the impact of this robotic process on journalism within the public sphere, and includes an interview with Jim Grice, who is Head of News and Current Affairs at London Live.

REFERENCE

Bell, E. and Owen, T. (2017) The Platform Press: How Silicon Valley reengineered journalism. The Tow Center for Digital Journalism at Columbia University’s Graduate School of Journalism. Available from: https://www.cjr.org/tow_center_reports/platform-press-how-silicon-valley-reengineered-journalism.php [Accessed 30 March 2017]

Friday, 25 August 2017

Westminster Student Blog Series

The Act of Sharing on Social Media

Author: Erxiao Wang

Social media has been a growing theme of the Information Age we live in. It is of considerable importance to understand, utilise, and engage wisely with social media platforms within our contemporary networked digital environment. This video is a theoretically inspired social media artefact; it introduces some of the key arguments in University of Westminster Professor Graham Meikle's book “Social Media: Communication, Sharing and Visibility”. The book covers ideas such as media convergence, the business model of online sharing, mediated online visibility, and the exploitable data that is subject to online surveillance. Professor Meikle also analyses the commercial Internet of Web 2.0, which enabled user-generated content and witnessed the coming together of public and personal communication, and the always-on mobile connectivity that has enabled greater mobility and allows social change. To apply these key theories to our everyday practice and conversations on social media, I had a chat with a good friend of mine, Junchi Deng, an accordion player who attends the Royal Academy of Music here in London and also posts and vlogs regularly. We talked about the act of sharing on social media and the idea of promoting oneself on social networking sites, especially for a musician like himself. Our discussion centres on everyday use of social media and our individual observations on the incorporation and review of these platforms.

Friday, 4 August 2017

Terrorism and Social Media Conference Part Two

On 27 and 28 June, some of the world’s leading experts in counter-terrorism and 145 delegates from 15 countries gathered at Swansea University’s Bay Campus for the Cyberterrorism Project’s Terrorism and Social Media conference (#TASMConf). Over the two days, 59 speakers presented their research into terrorists’ use of social media and responses to this phenomenon. The keynote speakers were Sir John Scarlett (former head of MI6), Max Hill QC (the UK’s Independent Reviewer of Terrorism Legislation), Dr Erin Marie Saltman (Facebook’s Policy Manager for counter-terrorism and counter-extremism in Europe, the Middle East and Africa), Professor Philip Bobbitt, Professor Maura Conway and Professor Bruce Hoffman. The conference covered a diverse range of disciplines including law, criminology, psychology, security studies, linguistics, and many more. Amy-Louise Watkin and Joe Whittaker take us through what was discussed (blog originally posted here).

Tuesday, 1 August 2017

Terrorism and Social Media Conference Part One

On 27 and 28 June, some of the world’s leading experts in counter-terrorism and 145 delegates from 15 countries gathered at Swansea University’s Bay Campus for the Cyberterrorism Project’s Terrorism and Social Media conference (#TASMConf). Over the two days, 59 speakers presented their research into terrorists’ use of social media and responses to this phenomenon. The keynote speakers were Sir John Scarlett (former head of MI6), Max Hill QC (the UK’s Independent Reviewer of Terrorism Legislation), Dr Erin Marie Saltman (Facebook’s Policy Manager for counter-terrorism and counter-extremism in Europe, the Middle East and Africa), Professor Philip Bobbitt, Professor Maura Conway and Professor Bruce Hoffman. The conference covered a diverse range of disciplines including law, criminology, psychology, security studies, linguistics, and many more. Amy-Louise Watkin and Joe Whittaker take us through what was discussed (blog originally posted here).

Proceedings kicked off with keynotes from Professor Bruce Hoffman and Professor Maura Conway. Professor Hoffman discussed the threat from the Islamic State (IS) and al-Qaeda (AQ). He discussed several issues, one of which was the quiet regrouping of AQ, stating that their presence in Syria should be seen as just as dangerous as, and even more pernicious than, IS. He concluded that the Internet is one of the main reasons why IS has been so successful, predicting that as communication technologies continue to evolve, so will terrorists’ use of social media and the nature of terrorism itself. Professor Conway followed with a presentation discussing the key challenges in researching online extremism and terrorism. She focused mainly on the importance of widening the groups we research (not just IS!), widening the platforms we research (not just Twitter!), widening the mediums we research (not just text!), and additionally discussed the many ethical challenges that we face in this field.

The key point from the first keynote session was to widen the research undertaken in this field, and we think that the presenters at TASM were able to make a good start on this with research on different languages, different groups, different platforms, females, and children. Starting with different languages, Professor Haldun Yalcinkaya and Bedi Celik presented research in which they took the methodology Berger and Morgan used in 2015 to study English-speaking Daesh supporters on Twitter and applied it to Turkish-speaking Daesh supporters on Twitter. They undertook this research while Twitter was carrying out major account suspensions, which dramatically reduced their dataset. They compared their findings with Berger and Morgan’s study and a previous Turkish study, finding a significant decrease in the follower and followed counts, and noting that the average followed count was even lower than that of the average Twitter user. They found that other average values followed a similar trend, suggesting that the accounts in their dataset had less power on Twitter than those in previous findings, and that this could be interpreted as evidence that Twitter’s suspensions were succeeding.

Next, we saw a focus away from the Middle East as Dr Pius Eromonsele Akhimien presented his research on Boko Haram and their social media war narratives. His research focused on the language used in YouTube videos from 2014, when the Chibok girls were abducted, until 2016, when some of the girls were released. Dr Akhimien emphasised the use of language as a weapon of war. His research revealed that Boko Haram displayed a lot of confidence in their language choice and reinforced this through the use of strong statements. They additionally used taunts to emphasise their control, for example, “yes I have your girls, what can you do?” Lastly, they used threats, and followed through with these offline.

Continuing the focus away from the Middle East, Dr Lella Nouri, Professor Nuria Lorenzo-Dus and Dr Matteo Di Cristofaro presented their inter-disciplinary research into the far-right groups Britain First (BF) and Reclaim Australia (RA). This research used corpus-assisted discourse analysis (CADS) to analyse, firstly, why these groups are using social media and, secondly, how they are using it. The datasets were collected from Twitter and Facebook using the social media analytic tool Blurrt. One of the key findings was that both groups clearly favoured Facebook over Twitter, which has not been seen in other forms of extremism. Also, both groups saliently used othering, with Muslims and immigrants found to be the primary targets. The othering technique was further analysed to find that RA tended to use a specific topic or incident to support their goals and promote their ideology, while BF tended to portray Muslims as paedophiles and groomers to support their goals and ideology.

Friday, 21 July 2017

Save your outrage: online cancer fakers may be suffering a different kind of illness

Peter Bath, University of Sheffield and Julie Ellis, University of Sheffield

Trust is very important in medicine. Increasing numbers of people are using the internet to manage their health by looking for facts about specific illnesses and treatments available. And patients, their carers and the public in general need to trust that this information is accurate, reliable and up to date.

Alongside factual health websites, the internet offers discussion forums, personal blogs and social media for people to access anecdotal information, support and advice from other patients. Individuals share their own experiences, feelings and emotions about their illnesses online. They develop relationships and friendships, particularly with people who have been through illnesses themselves and can empathise with them.

Some health professionals have concerns about the quality of medical information on the internet. But others are advocating that patients should be more empowered and encourage people to use these online communities to share information and experiences.

Within these virtual communities, people don’t just have to trust that the medical information they encounter is factually correct. They are also placing trust in the other users they encounter online. This is the case whether they are sharing their own, often personal, information or reading about the personal experiences of others.

Darker side to sharing

While online sharing can be very beneficial to patients, there is also a potentially darker side. There have been widely-publicised cases of “patients” posting information about themselves that is, at best, factually incorrect and might be considered deliberately deceptive.

Blogger Belle Gibson built a huge following after writing about being diagnosed with a brain tumour at the age of 20 and the experience of having just months to live. She blogged about her illness, treatment, recovery and eventual relapse while developing and marketing a mobile phone app, a website and a book. Through all of this she advocated diet and lifestyle changes over conventional medicine, claiming this approach had been key to her survival.

But Gibson’s stories were later revealed to be part of a tangled web of deceit, which also involved her promising to donate money to charities but, allegedly, never delivering the payments.

In one sense, people’s trust was broken when they realised they had paid money under false pretences. In another sense, they may have followed Gibson’s supposed example of halting prescribed treatments and adopting a new diet and lifestyle when there was no real evidence this would work. But, at a deeper level, people may feel betrayed because they sympathised and indeed empathised with a person who was later revealed to be a fraud.

The truth was eventually publicised by online news outlets and Gibson was subject to complaints and abuse on social media. But there is something about the anonymity of the internet that facilitates this kind of deceptive behaviour in the first place. People are far less likely to be taken in by this sort of thing in the real world, but they are online. And it destroys people’s trust in online resources across the board.

Trust in extreme circumstances

Despite this, the moral outrage generated online by this kind of extreme and relatively isolated incident may be misplaced. There is evidence to suggest that people who do this may actually be ill, but it’s a very different sort of illness.

Faking diseases or illnesses – often described as Munchausen’s syndrome – is not unique to the internet and was reported long before its advent. The Roman physician Galen is credited with being the first to identify occasions on which people lied about or induced symptoms in order to simulate illness. More recently, the term “Munchausen by internet” has been used to describe behaviour in which people use chat rooms, blogs and forums to post false information about themselves to gain sympathy, trust or to control others.

Whichever way we view people who post such false information, their behaviour raises the question why people with genuine illnesses still share such intimate details when the potential for dishonesty from others is so evident. Our new research project, “A Shared Space and a Space for Sharing”, led by the University of Sheffield, is trying to understand how trust works in online spaces among people in extreme circumstances, such as the terminally ill.

We need to know why people trust and share so much with others when they have never met them and when there is so much potential for deceit and abuse. It is also important to identify people who fake illness online if we are to ensure there is public trust in genuine online support platforms.

Peter Bath, Professor of Health Informatics, University of Sheffield and Julie Ellis, Research associate, University of Sheffield

This article was originally published on The Conversation. Read the original article.

Friday, 14 July 2017

Book review: The SAGE Handbook of Social Media Research Methods

Friday, 2 June 2017

Q&A Session with Authors of The SAGE Handbook of Social Media Research Methods

The editors Luke Sloan and Anabel Quan-Haase kindly responded to the questions that you submitted. If you missed the event, you can view the Q&A session here: https://storify.com/SAGE_Methods/q-a-with-anabel-quan-haase-and-luke-sloan

Let us know your thoughts by tweeting us @NSMNSS!

Friday, 19 May 2017

The SAGE Handbook of Social Media Research Methods – Questions for Authors

Friday, 12 May 2017

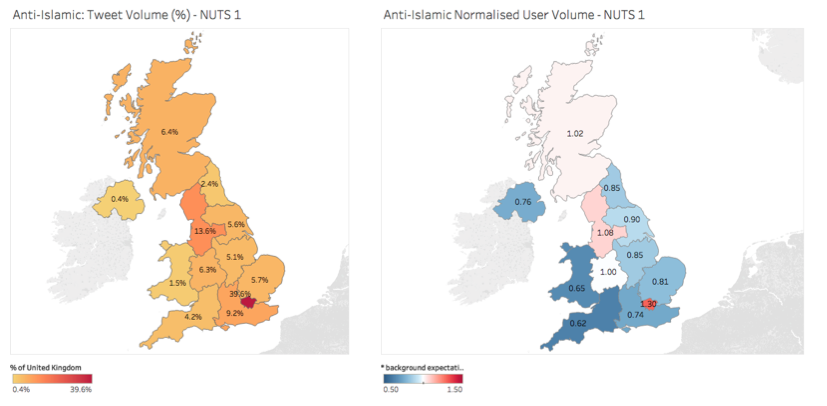

Anti-Islamic Content on Twitter

This analysis was presented at the Mayor of London’s Policing and Crime Summit on Monday 24 April, 2017.

The Centre for the Analysis of Social Media at Demos has been conducting research to measure the volume of messages on Twitter algorithmically considered to be derogatory towards Muslims over a year, from March 2016 to March 2017. This is part of a broad effort to understand the scale, scope and nature of uses of social media that are possibly socially problematic and damaging.

Over a year, Demos’ researchers detected 143,920 Tweets sent from the UK considered to be derogatory and anti-Islamic – this is about 393 a day. These Tweets were sent from over 47,000 different users, and fell into a number of different categories – from directed insults to broader political statements.

A random sample of hateful Tweets was manually classified into three broad categories:

- ‘Insult’ (just under half): Tweets used an anti-Islamic slur in a derogatory way, often directed at a specific individual.

- ‘Muslims are terrorists’ (around one fifth): Derogatory statements generally associating Muslims and Islam with terrorism.

- ‘Muslims are the enemy’ (just under two fifths): Statements claiming that Muslims, generally, are dedicated toward the cultural and social destruction of the West.

The Brussels, Orlando, Nice, Normandy, Berlin and Quebec attacks all caused large increases. There was a period of heightened activity over Brexit, and sometimes online ‘Twitter storms’ (such as the use of derogatory slurs by Azealia Banks toward Zayn Malik) also drove sharp increases.

Tweets containing this language were sent from every region of the UK, but the most over-represented areas, compared to general Twitter activity, were London and the North-West.

Of the 143,920 Tweets containing this language and classified as being sent from within the UK, 69,674 (48%) contained sufficient information to be located within a broad area of the UK. To measure how many Tweets each region generally sends, a random baseline of 67 million Tweets was collected over 19 days in late February and early March. The volume of Tweets containing derogatory language towards Muslims was compared to this baseline. This identified regions where the volume was higher or lower than expected on the basis of general activity on Twitter.
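As a rough illustration of the baseline comparison described above, the sketch below computes one possible over-representation measure: a region's share of flagged Tweets divided by its share of baseline Tweets. This is only an assumption about how such a comparison could be done, not the method Demos used; the function name, region names and counts are all hypothetical.

```python
def over_representation(flagged_counts, baseline_counts):
    """Return, per region, (share of flagged Tweets) / (share of baseline Tweets).

    A ratio above 1 means the region sends more flagged Tweets than its
    general Twitter activity would suggest; below 1 means fewer.
    """
    flagged_total = sum(flagged_counts.values())
    baseline_total = sum(baseline_counts.values())
    ratios = {}
    for region, flagged in flagged_counts.items():
        flagged_share = flagged / flagged_total
        baseline_share = baseline_counts[region] / baseline_total
        ratios[region] = flagged_share / baseline_share
    return ratios


# Hypothetical counts, invented purely for illustration (not figures from the study).
flagged = {"Region A": 300, "Region B": 100, "Region C": 100}
baseline = {"Region A": 4000, "Region B": 4000, "Region C": 2000}

ratios = over_representation(flagged, baseline)
for region, ratio in sorted(ratios.items()):
    print(f"{region}: {ratio:.2f}")
```

In this toy data, Region A would count as over-represented and Region B as under-represented, while Region C sends roughly what its general activity predicts.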

In London, North London sent markedly more tweets containing language considered derogatory towards Muslims than South London.

27,576 (39%) tweets were sent from Greater London. Of these, 14,953 Tweets (about half) could be located to a more specific region within London (called a ‘NUTS-3 region’; typically either a London Borough or a combination of a small number of London Boroughs).[1]

- Brent, Redbridge and Waltham Forest sent the highest number of derogatory, anti-Islamic Tweets relative to their baseline average of general Twitter activity.

- Westminster and Bromley sent the fewest derogatory, anti-Islamic Tweets relative to their baseline average of general Twitter activity.

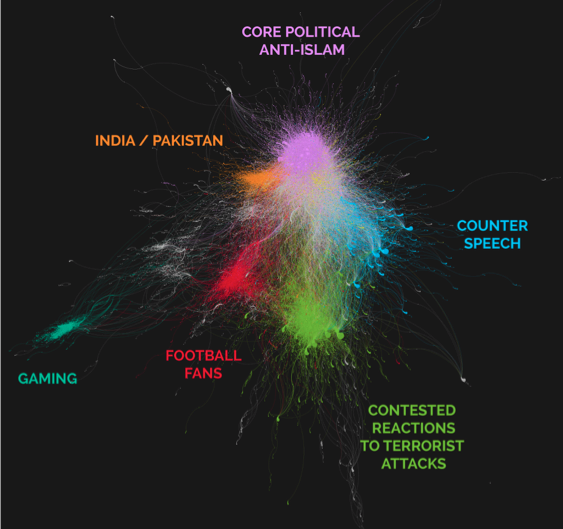

Demos’ research identified six different online tribes. [2] These were:

Core political anti-Islam. The largest group of about 64,000 users including recipients of Tweets. Politically active group engaged with international politics.

- Hashtags employed by this group suggest engagement in anti-Islam and right wing political conversations: (#maga #tcot #auspol #banIslam #stopIslam #rapefugees)

- In aggregate, words in user descriptions emphasise nationality, right-wing political interest and hostility towards Islam (anti, Islam, Brexit, UKIP, proud, country)

- An aggregate overview of user descriptions implies a relatively young group (sc, snapchat, ig, instagram, 17, 18, 19, 20, 21)

- User descriptions also imply a mix of political opinion (blacklivesmatter, whitelivesmatter, freepalestine)

- Hashtags engage in conversations emerging in the aftermath of terrorist attacks (#prayforlondon, #munich, #prayforitaly, #prayforistabul, #prayformadinah, #orlando)

- Likewise, hashtags are a mix of pro- and anti-Islamic (#britainfirst, #whitelivesmatter, #stopislam, #postrefracism, #humanity)

The shape of the cluster shows a smaller number of highly responded-to/retweeted comments.

- Hashtags engage predominantly with anti-racist conversations (#racisttrump, #postrefracism, #refugeeswelcome, #racism, #islamophobia)

- In aggregate, user descriptions show mix of political engagement and general identification with left-wing politics (politics, feminist, socialist, Labour).

- Overall they also show more descriptions of employment than the other clusters (writer, author, journalist, artist).

- The bio descriptions of users within this cluster overwhelmingly contain football-related words (fan, football, fc, lfc, united, liverpool, arsenal, support, club, manchester, mufc, chelsea, manutd, westham)

- No coherent use of hashtags. This cluster engaged in lots of different conversations.

- Hashtags overwhelmingly engage in conversation to do with India-Pakistan relations or just Pakistan (#kashmir, #surgicalstrike, #pakistan, #actagainstpak).

- In aggregate, words in user descriptions relate to Indian/nationalist identity and pro-Modi identification (proud, Indian, hindu, proud indian, nationalist, dharma, proud hindu, bhakt)

- There is no coherent use of hashtags.

- Overall, aggregate comments in user descriptions either imply young age (16,17,18) or are related to gaming (player, cod [for ‘Call of Duty’], psn)

- 50% of Tweets classified as containing language considered anti-Islamic and derogatory are sent by only 6% of accounts

- 25% of Tweets classified as containing language considered anti-Islamic and derogatory were sent by 1% of accounts.
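Concentration figures like these can be derived from a list of per-account Tweet counts. The sketch below is a hypothetical illustration, not the Demos method: it sorts accounts from most to least prolific and reports what fraction of accounts is needed to reach a given share of all Tweets. The function name and the sample counts are invented.

```python
def share_of_accounts_for(tweet_counts, tweet_share):
    """Fraction of accounts (most prolific first) needed to cover `tweet_share` of all Tweets."""
    counts = sorted(tweet_counts, reverse=True)
    target = tweet_share * sum(counts)
    running = 0
    for n_accounts, count in enumerate(counts, start=1):
        running += count
        if running >= target:
            return n_accounts / len(counts)
    return 1.0


# Hypothetical Tweets-per-account counts for ten accounts (illustrative only).
counts = [50, 20, 10, 5, 5, 4, 3, 1, 1, 1]

half = share_of_accounts_for(counts, 0.5)
three_quarters = share_of_accounts_for(counts, 0.75)
print(f"{half:.0%} of accounts sent 50% of Tweets")
print(f"{three_quarters:.0%} of accounts sent 75% of Tweets")
```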

The full paper, outlining methodology and ethical notes, can be downloaded here.

NOTES –

[1] An important caveat is that the volumes associated with each of these regions are obviously smaller than the total number of Tweets in the dataset overall.

[2] A caveat here is that this network graph includes Tweets that are misclassified and also includes the recipients of abuse. It is also important to note that not everyone who shares Tweets does so with malicious intent; they can be doing so to highlight the abuse to their own followers.

[3] In other work on the subject we have found there are usually more posts about solidarity, support for Muslims than attacks on them.